Sending and receiving webstreams with Spectrum Lab

Contents, Introduction

This chapter describes how to send (transmit) or analyse (receive) web audio streams using Spectrum Lab.

The audio streaming client was complemented by a tiny built-in audio stream server which, at the time of this writing, could provide a compressed or non-compressed audio stream to a limited number of remote clients.

- Contents, Introduction

- Analysing web streams

- Sending streams to the web

- Logging web streams (compressed audio data) as disk files

- VLF 'Natural Radio' audio streams

- Operation as audio stream server

- Internals

- Receiving or sending audio samples via serial port ("COM") or UDP port

- Streaming audio (and a 'live' waterfall) through SL's Web Server (based on OpenWebRX, using just an internet browser)

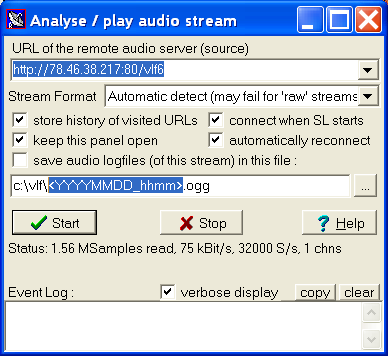

Analysing web streams (SL acting as client)

To open an internet audio stream for playback and analysis, select 'File' (in the main menu) > 'Audio Files & Stream' > 'Analyse and Play Stream / URL'.

An input box opens with the previously visited stream URLs. Pick one from the list, or enter the complete URL (*) of the audio stream in the edit field.

To store the URL for the next session, set the checkmark 'save URL history'. If you are concerned about privacy, leave it unchecked.

Next, click "Ok". The program will try to establish a connection with the Internet (or, depending on the URL, with an audio stream server in your LAN).

This may take a second or two. After successfully receiving a few kilobytes of data from the stream (and, ideally, confirming that SL can "handle" the format), the stream URL and the stream's sampling rate will automatically be copied into SL's Input Device / Stream / Driver configuration.

Technical background / info: This is done on purpose, because Spectrum Lab normally needs to "prepare" its internal processing chain for the number of input channels and the input sampling rate before actually opening an input device / stream / hardware driver.

Thus, to 'be prepared' for analysing / playing the web stream when Spectrum Lab is launched the next time, the above parameters (stream URL, sampling rate, and number of channels where applicable) are saved on exit in the "machine-dependent configuration" (e.g. MCONFIG.INI). For historic reasons, they are stored in the "[Soundcard]" section, parameter "AudioInputDeviceOrDriver". In this particular case, that parameter contains the URL, easily recognizable by the prefix identifying the network protocol, like "tcp://" or "http://".

Optionally, the program can reconnect a stream when the connection breaks down. To allow the network to recover after errors (DSL or WLAN problems, etc.), the program waits for a few seconds before attempting a new connection.

In more recent SL versions (since 2022), the 'Analyse / play audio stream' panel also shows some information intended for debugging (troubleshooting stream-related issues). There's a small text box from which this info can be copied & pasted into emails (etc). The info shown there varies, depending on the audio stream format and the presence of periodic timestamps. Here are just a few of the parameters usually shown:

- Status: 123 MSamples read, ..

This is the number of sample points received from the stream, since Spectrum Lab connected to the remote source.

- kBit/s:

The number of 'kilobits per second', i.e. the network bandwidth occupied by this stream on your DSL (or other) internet connection. Since most streams use compression, the average number of 'kilobits per second' is usually much lower than the 'number of samples per second' multiplied by the number of 'bits per sample'!

- 1 (or whatever) chns:

Number of channels in the stream. Usually one (the 'timestamp' channel is not counted as an extra channel).

- 12345 tstamps:

Number of timestamps received along with the audio samples since Spectrum Lab connected to the remote source.

- Timestamp latency: 12.3 sec

Difference, in seconds, between the last received timestamp and the PC's local time (converted to UTC).

If this parameter slowly creeps up (or down) by a few seconds per hour, there may be something wrong with the local system time or with the remote sender's timestamp source. Alternatively (if the difference increases), a backlog may be building up somewhere in the network, between the 'sender' and the 'receiver'.

Due to multiple stages of buffering in the remote receiver, the digital signal processing at the RX site, the 'stream server', the internet, etc., timestamp latencies of several seconds are 'normal'. Timestamp latencies over a minute are 'unusual', and negative timestamp differences indicate a problem. Troubleshoot this first by synchronising your PC's local time with a reliable internet time sync service.

- RX Buffer: x.x sec

Receive buffer usage, measured in 'seconds of audio'.

If this parameter slowly creeps up, there may be something wrong with the soundcard's output sampling rate. For example, SL may 'think' the soundcard's output SR is 48000.0 samples per second and resample the stream to exactly that rate, while in fact the soundcard only 'consumes' 47990.0 S/s (short for "samples per second"). In that case, either the stream's receive buffer will fill up (until it overflows), or the audio output device's buffer will fill up. If the output device is a soundcard (accessed via the multimedia API) and the 'Timestamp Latency' or 'RX Buffer' usage creeps up while playing back the stream, you can fix it by letting SL resample the output to the precise output sampling rate, as described in 'Output Resampling'.
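The two drift symptoms described above can be estimated with a little arithmetic. The following C sketch only illustrates that arithmetic; it is not Spectrum Lab's code. It assumes the received timestamp is available as seconds + nanoseconds since 1970 (as in the VT_BLOCK header described under 'Internals'), and the function names are hypothetical.

```c
#include <stdint.h>

/* Timestamp latency in seconds: local UTC time minus the last received timestamp.
   Both times are given as seconds + nanoseconds since 1970-01-01 00:00:00 UTC. */
double timestamp_latency_sec(uint32_t ts_secs, uint32_t ts_nsec,
                             int64_t now_secs, int64_t now_nsec)
{
    double ts  = (double)ts_secs  + 1e-9 * (double)ts_nsec;
    double now = (double)now_secs + 1e-9 * (double)now_nsec;
    return now - ts;                 /* > 0: the stream lags behind local time */
}

/* Growth of the receive buffer, in seconds of audio per hour, if the stream is
   resampled to 'assumed_sr' but the output device only consumes 'actual_sr'. */
double rx_buffer_growth_sec_per_hour(double assumed_sr, double actual_sr)
{
    double surplus = assumed_sr - actual_sr;   /* samples per second left over */
    return surplus * 3600.0 / assumed_sr;      /* convert samples into seconds of audio */
}
```

With the numbers from the example above, rx_buffer_growth_sec_per_hour(48000.0, 47990.0) yields 0.75. That means the buffer would grow by about 18 seconds of audio per day. The current UTC time needed for the latency could be obtained with the C11 function timespec_get(&ts, TIME_UTC).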

Notes on the URL format

The URL must contain the complete address of the audio stream resource.

(*) Note on the URL: In most cases, SL is able to resolve the stream URL if the given URL is not an Ogg resource but an M3U redirector (aka "playlist").

But some websites respond with an error when the HTTP 'GET' request doesn't originate from a web browser, doesn't carry a bunch of red tape (which SL doesn't support), or doesn't use HTTP version 1.1 (SL only uses HTTP/1.0).
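For illustration, an M3U 'playlist' is a plain text file. Lines starting with '#' are comments or directives (such as #EXTM3U or #EXTINF), and the other lines name the actual resources. The following C sketch picks the first entry from such a file. It is a hypothetical helper, not how Spectrum Lab resolves redirectors internally, and it assumes the playlist body has already been fetched via HTTP.

```c
#include <ctype.h>
#include <string.h>

/* Copies the first entry (first non-empty line not starting with '#') of an M3U
   playlist into 'url', truncated to url_size-1 characters.
   Returns 1 if an entry was found, otherwise 0. */
int m3u_first_entry(const char *body, char *url, size_t url_size)
{
    const char *p = body;
    if (url_size == 0) return 0;
    while (*p != '\0') {
        const char *eol = p + strcspn(p, "\r\n");             /* end of this line */
        const char *s = p;
        const char *e = eol;
        while (s < e && isspace((unsigned char)*s)) s++;      /* trim leading blanks */
        while (e > s && isspace((unsigned char)e[-1])) e--;   /* trim trailing blanks */
        if (e > s && *s != '#') {              /* '#' marks #EXTM3U, #EXTINF, comments */
            size_t n = (size_t)(e - s);
            if (n >= url_size) n = url_size - 1;
            memcpy(url, s, n);
            url[n] = '\0';
            return 1;
        }
        p = eol;
        while (*p == '\r' || *p == '\n') p++;                 /* skip the line break(s) */
    }
    return 0;
}
```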

Examples (see also: Live VLF Natural Radio streams):

- http://dradio.ic.llnwd.net/stream/dradio_dkultur_m_a.ogg (Deutschlandradio Kultur from Germany .. moved to an unknown location)

- http://127.0.0.1/_audiostream.ogg to connect to the built-in audio web server of another instance of SL running on the same machine, with its audio server configured for HTTP.

- tcp://someones_callsign.duckdns.org:4xxx was a 'raw TCP' (no HTTP) ELF stream from a remote observatory. More on those precisely timestamped streams later.

- Use your imagination to connect to Spectrum Lab running on another PC in your local network. Only the IP address will be different (definitely not 127.0.0.1). You can see it on the other machine on SL's HTTP server control panel, or on SL's Audio Stream Server control panel.

If it doesn't work, copy the URL into the address bar of your favourite web browser (if the web browser isn't able to play the audio, chances are low that this program will be able to play it). See next chapter for details about the supported audio stream formats, and the supported network protocols.

Often, the 'real' stream resource is hidden behind an awful lot of Javascript or other stuff. In that case, playing around with the web browser can often reveal the 'real' stream URL. Spectrum Lab makes no attempt to execute Javascript just to find out the real URL; in fact, it cannot execute browser scripts at all.

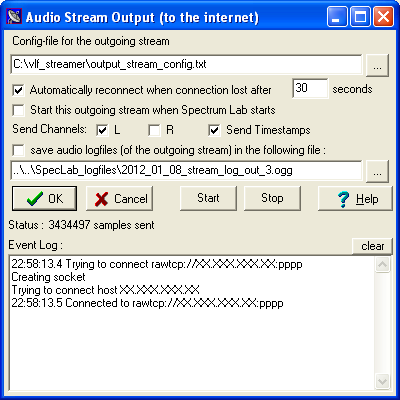

Sending audio streams to a remote server (SL acting as client)

This chapter describes how Spectrum Lab can be configured to send one audio stream to a remote server. In this case, SL acts as client, which means it initiates the connection and then starts sending live audio to the remote server.

This function was first used to feed live data from VLF receivers to the Live VLF Natural Radio server (with high-speed internet connection) operated by Paul Nicholson.

In contrast to the server, the streaming client as described below only requires a moderate DSL connection. For example, a mono Ogg/Vorbis stream with 32000 samples/second and Vorbis 'Quality' set between 0.4 and 0.5 required about 64 ... 75 kBit/second.
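To see where the saving comes from, compare the figure above with the bit rate of the same signal without compression. This minimal C sketch assumes 16-bit samples as the non-compressed reference, since the text above does not specify one. The function names are hypothetical.

```c
/* Bit rate of a non-compressed stream, in kbit/s (1 kbit = 1000 bit). */
double raw_kbit_per_sec(double sample_rate, int channels, int bits_per_sample)
{
    return sample_rate * channels * bits_per_sample / 1000.0;
}

/* How many times smaller the compressed stream is than the raw one. */
double compression_ratio(double raw_kbps, double compressed_kbps)
{
    return raw_kbps / compressed_kbps;
}
```

For the mono 32000 samples/second example, raw_kbit_per_sec(32000.0, 1, 16) gives 512 kbit/s. At 64 ... 75 kBit/second, the Ogg/Vorbis stream therefore corresponds to a compression ratio of roughly 8 to 7.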

In SL's file menu, select Audio Stream Output. This opens a control panel for the audio stream output.

The controls on this panel are:

- Configuration file for the outbound stream:
  That file contains all the details which the program must know to send the stream. It's a plain text file which you have to write yourself, and store in a safe place (you will need some info about the remote server for this - see next chapter).
  The stream configuration file is not a part of the Spectrum Lab configuration (only the name of the file is, but not the file itself). This eliminates the risk of passing passwords and other 'private' details along with a normal configuration file.

- Automatically reconnect, Start output stream when Spectrum Lab starts:
  Used for 'unattended operation'.

- Send Channels:
  Defines which of the input audio channels you want to send (left / right audio channel, or both), and whether to send GPS-based timestamps in an Ogg stream or not.

- Save audio logfiles:
  With this option, a "copy" of the outbound audio stream (Ogg/Vorbis) will be saved on your hard disk. The dotted button near the input field opens a file selector.

Configuration of the output stream

The configuration file may look like this:

; Configuration file for an OUTBOUND audio stream .
; Loaded by Spectrum Lab shortly before starting to send a stream.
; server: <protocol>://<host>:<port> . protocol: rawtcp or http .
server = rawtcp://127.0.0.1:1234
; password: some audio streaming servers may ask for it
password =
; format: only required for non-compressed streams, default is Vorbis-compressed ogg
; format = ogg ; default
; format = uncompressed,int8
; format = uncompressed,int16
; format = uncompressed,int32
; format = uncompressed,float32
; quality: see Vorbis documentation; 0.5 seems to be a good default value
quality = 0.5

Empty lines, or lines beginning with a semicolon, are ignored by the parser. At the moment, the keywords shown in the example ('server', 'password', 'format', 'quality') are the only recognized keywords in the stream configuration file. Everything else is silently ignored.
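The parsing rules just described can be sketched in C as follows: empty lines and ';' comment lines are skipped, four keywords are recognized, and everything else is ignored. This is a hypothetical reader for illustration, not Spectrum Lab's parser. The struct, the buffer sizes and the defaults ('ogg', quality 0.5, taken from the example file) are assumptions, and comments placed after a value on the same line are not handled.

```c
#include <ctype.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical container for the four recognized keywords. */
typedef struct {
    char   server[256];
    char   password[128];
    char   format[64];
    double quality;
} StreamConfig;

/* Assumed defaults: Vorbis-compressed Ogg, quality 0.5 (as suggested in the example). */
void config_defaults(StreamConfig *cfg)
{
    memset(cfg, 0, sizeof *cfg);
    snprintf(cfg->format, sizeof cfg->format, "%s", "ogg");
    cfg->quality = 0.5;
}

/* Strips leading and trailing whitespace in place; returns the first non-blank char. */
static char *trim(char *s)
{
    while (isspace((unsigned char)*s)) s++;
    char *e = s + strlen(s);
    while (e > s && isspace((unsigned char)e[-1])) *--e = '\0';
    return s;
}

/* Parses one line of the stream configuration file into 'cfg'. */
void parse_config_line(StreamConfig *cfg, const char *line_in)
{
    char line[512];
    snprintf(line, sizeof line, "%s", line_in);   /* work on a writable copy */
    char *s = trim(line);
    if (*s == '\0' || *s == ';') return;          /* empty line or comment */
    char *eq = strchr(s, '=');
    if (eq == NULL) return;                       /* not of the form key = value */
    *eq = '\0';
    char *key = trim(s);
    char *val = trim(eq + 1);
    if (strcmp(key, "server") == 0)
        snprintf(cfg->server, sizeof cfg->server, "%s", val);
    else if (strcmp(key, "password") == 0)
        snprintf(cfg->password, sizeof cfg->password, "%s", val);
    else if (strcmp(key, "format") == 0)
        snprintf(cfg->format, sizeof cfg->format, "%s", val);
    else if (strcmp(key, "quality") == 0)
        cfg->quality = strtod(val, NULL);
    /* any other keyword is silently ignored */
}
```

A caller would read the file line by line with fgets() and pass each line to parse_config_line().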

A template for an output stream configuration file is contained in the installation archive; see configurations/output_stream_config_dummy.txt.

Non-server-specific options are defined in the dialog box shown above. Only the data entered in that configuration dialog will be saved by Spectrum Lab in a *.usr or *.ini file.

The stream configuration file will never be modified by SL. It will only be read, never written (again, it's your task to write it with a plain text editor).

Notes on unattended operation

For long term operation, without regular supervision, you should..

- Set the option 'Automatically reconnect when connection lost' on the control panel shown above.

- To let SL start streaming without your intervention as soon as it is launched, set the option 'Start output stream when Spectrum Lab starts' on the same panel.

- Turn off annoying screen savers, automatic hibernation, etc.

- Stop Windows from rebooting your PC without your permission! Don't forget the settings in the 'Windows Security Center' or 'Windows Action Center' (..what a name..), i.e. let Windows download updates but choose the installation time manually (in German: "Updates herunterladen, aber Installationszeitpunkt manuell festlegen"). If you turn off automatic updates in the 'security center' (or whatever MS decided to call that thing today), check for system updates yourself. Otherwise, you may find your audio stream broken down for a few days, just because Windows decided to reboot your PC after installing a security update.

Alternative (not recommended): Leave automatic updates enabled (i.e. let Windows update your system whenever it thinks necessary), and find out how to let Windows launch Spectrum Lab automatically after each reboot. Under Windows XP, this was possible by placing a link to the program in the 'Autostart' folder. But you will also have to find out how to disable the login procedure at system boot, etc. Especially under "Vista" and later versions, you're on your own at this point.

- If the purpose is to feed timestamped VLF streams to Paul Nicholson's Live VLF Natural Radio server, consider using Paul's VLF Receiver Toolkit (aka VLF-RX-tools) under Linux.

Logging web streams

This is possible with received (inbound) as well as transmitted (outbound) streams. The stream data in Ogg/Vorbis audio format (plus the optional timestamp channel) can be logged as a file on the hard disk or similar.

Because the storage format of an Ogg file is exactly the same as that of an Ogg stream, the stream data are written without any conversion, so this causes no significant additional CPU load. Also, logging the audio data this way saves a lot of disk space compared to the wave file format.

At the moment, SL does *not* generate a different name for the logfile (with a sequence number, or date+time in the filename), but this may change in future versions.

Templates to specify filenames with date and time

In many cases, the name of the logfile should contain the current date and time. This could be achieved with a string expression evaluated by Spectrum Lab's interpreter, but the following formats (templates) with special placeholders are easier to use and faster to enter:

Instead of typing

c:\vlf\20150115_2000.ogg

in the file selector dialog, and adjusting the name for the current date each time before starting the stream, you can enter

c:\vlf\<YYYYMMDD_hhmm>.ogg

This works similarly to the interpreter function str(), but it always uses the system's current date and time (in UTC). It replaces the 'time and date placeholders' in the same way as the format string of various interpreter functions in Spectrum Lab.

The parser recognizes a template by its angle brackets (and removes them), because neither '<' nor '>' is a valid character in a normal filename.

The example shown above will produce a filename with the current year, month, day, hour, and minute in an ISO8601-like fashion. The placeholders for hour, minute and second are lower-case letters to tell 'mm' (two-digit minute) from 'MM' (two-digit month).

The following characters are automatically replaced by date and time fields when placed between angle brackets as shown in the example:

- YY = year, two digits
- YYYY = year, four digits
- MM = month, two digits
- MMM = month NAME (Jan, Feb, Mar, Apr, ...)
- DD = day of month, two digits
- hh = hour of day, two digits
- mm = minute, two digits
- ss = second, two digits

Any other character placed between angle brackets will not be replaced. Thus the underscore used in the example appears as a 'separator' between date (YYYYMMDD) and time (hhmm) in the filename.

The angle brackets themselves do not appear in the filename (under Windows, '<' and '>' are not valid characters in a filename anyway).
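The placeholder substitution described above can be sketched in C like this. It is an illustrative re-implementation of the rules listed in this section, not Spectrum Lab's code. The function name is hypothetical, and the caller supplies the UTC time, e.g. from gmtime().

```c
#include <stdio.h>
#include <string.h>
#include <time.h>

/* Expands <...> date/time placeholders in 'tmpl' using the broken-down UTC time 't'.
   Returns the length of the result, or -1 if 'out' is too small. */
int expand_filename_template(const char *tmpl, const struct tm *t,
                             char *out, size_t out_size)
{
    static const char *const months[12] = { "Jan", "Feb", "Mar", "Apr", "May", "Jun",
                                            "Jul", "Aug", "Sep", "Oct", "Nov", "Dec" };
    size_t n = 0;
    int inside = 0;                                   /* 1 between '<' and '>' */
    if (out_size == 0) return -1;
    for (const char *p = tmpl; *p != '\0'; ) {
        char field[16] = "";
        size_t adv = 1;
        if (*p == '<' || *p == '>') {                 /* brackets are removed */
            inside = (*p == '<');
            p++;
            continue;
        }
        if (inside) {                                 /* longest placeholder first */
            if (strncmp(p, "YYYY", 4) == 0)     { snprintf(field, sizeof field, "%04d", t->tm_year + 1900); adv = 4; }
            else if (strncmp(p, "YY", 2) == 0)  { snprintf(field, sizeof field, "%02d", (t->tm_year + 1900) % 100); adv = 2; }
            else if (strncmp(p, "MMM", 3) == 0) { snprintf(field, sizeof field, "%s", months[t->tm_mon % 12]); adv = 3; }
            else if (strncmp(p, "MM", 2) == 0)  { snprintf(field, sizeof field, "%02d", t->tm_mon + 1); adv = 2; }
            else if (strncmp(p, "DD", 2) == 0)  { snprintf(field, sizeof field, "%02d", t->tm_mday); adv = 2; }
            else if (strncmp(p, "hh", 2) == 0)  { snprintf(field, sizeof field, "%02d", t->tm_hour); adv = 2; }
            else if (strncmp(p, "mm", 2) == 0)  { snprintf(field, sizeof field, "%02d", t->tm_min); adv = 2; }
            else if (strncmp(p, "ss", 2) == 0)  { snprintf(field, sizeof field, "%02d", t->tm_sec); adv = 2; }
        }
        if (field[0] == '\0') { field[0] = *p; field[1] = '\0'; }   /* copy other characters */
        size_t len = strlen(field);
        if (n + len >= out_size) return -1;           /* keep room for the terminating NUL */
        memcpy(out + n, field, len);
        n += len;
        p += adv;
    }
    out[n] = '\0';
    return (int)n;
}
```

With 2015-01-15 20:00 UTC, the template c:\vlf\<YYYYMMDD_hhmm>.ogg expands to c:\vlf\20150115_2000.ogg, as in the example at the start of this section. Longer placeholders are tested first, so that 'YYYY' is not consumed as two 'YY' fields and 'MMM' is not consumed as 'MM' plus 'M'.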

The same principle (filename templates with current date and time) can also be used in other places within the program, for example when starting to record the input from, or the output to, the soundcard as an audio file.

VLF radio streams

At the time of this writing, the following live VLF radio streams had been announced in the VLF group. The IP addresses may have changed, so if the links don't work anymore, please try Paul's VLF audio stream overview at abelian.org/vlf/.

- http://78.46.38.217/vlf1.m3u ("VLF1" from Todmorden, UK, via Paul Nicholson's Natural Radio server; HTTP; no timestamps)
- http://78.46.38.217/vlf3.m3u ("VLF3" from Sheffield, UK)
- http://78.46.38.217/vlf6.m3u ("VLF6" from the SL author's receiver near Bielefeld, Germany)
- http://78.46.38.217/vlf9.m3u ("VLF9" from Cape Coral, Florida)
- http://78.46.38.217/vlf15.m3u ("VLF15" from Cumiana, Northwest Italy)
- http://78.46.38.217/vlf19.m3u ("VLF19" from Sebring, Florida)
- http://78.46.38.217/vlf25.m3u ("VLF25" from Hawley, Texas)
- http://78.46.38.217/vlf34.m3u ("VLF34" from Surfside Beach, South Carolina)
- http://78.46.38.217/vlf35.m3u ("VLF35" from Forest, Virginia)

Note: When selecting the preconfigured setting 'Natural Radio' / 'Sferics and Tweeks' (in the 'Quick Settings' menu), some of the above URLs will be copied into the URL history, which can be recalled from the 'Analyse / Play Stream' dialog.

GPS-timestamped VLF radio streams

The following VLF live streams can only be played with the VLF Receiver Toolkit under Linux, or with Spectrum Lab under Windows (since V2.77 b11). Note that 'rawtcp' is not an official protocol name, so your browser will not know "what to do" with the pseudo-URLs listed below. But you can copy and paste them into the URL input box shown in the chapter 'Analysing web streams'.

- rawtcp://78.46.38.217:4401 ("VLF1" from Todmorden, on Paul Nicholson's Natural Radio server; with GPS-based timestamps)
- rawtcp://78.46.38.217:4406 ("VLF6" from Spenge near Bielefeld, raw stream with GPS-based timestamps)
- rawtcp://78.46.38.217:4416 (stereo combination of VLF1 from Todmorden (L) and VLF6 from Spenge (R), with timestamps)
- rawtcp://78.46.38.217:4427 (stereo combination of VLF1 from Todmorden (L) and VLF6 from Marlton (R), with timestamps)

More timestamped VLF streams will hopefully be available soon, since they can provide valuable information for research. See the discussions and announcements in the VLF group about ATD (arrival time difference) calculations, origins and spatial distribution of whistler entry points, etc.

As a motivation for prospective VLF receiver operators, the next chapter describes how to configure Spectrum Lab for sending such (GPS-)timestamped audio streams.

Setting up a timestamped VLF 'Natural Radio' audio stream

Prior to 2011, some of the VLF streams listed above used the combination of Spectrum Lab + Winamp + Oddcast as described here. Since the implementation of Ogg/Vorbis in Spectrum Lab, plus Paul Nicholson's extension to send GPS-based timestamps in his VLF receiver toolkit, the transition from the old (non-timestamped) MP3 based streams to the Ogg/Vorbis streams (with or without timestamps) has become fairly simple.

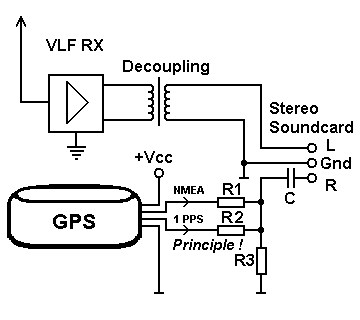

First of all, if possible at your receiver's location, consider sending a timestamped audio stream. All you need in addition to your hardware (VLF receiver, a soundcard with, hopefully, a stereo 'Line' input) is a suitable, yet inexpensive, GPS receiver. The author of Spectrum Lab had good success with a Garmin GPS 18 LVC. Note the emphasis on 'LVC', since the Garmin GPS 18 "USB" doesn't deliver the important PPS output. If you are interested in the principle of GPS pulse synchronisation, also see 'Principle and hardware for GPS synchronisation' under 'Further reading' below.

Here is a typical VLF receiver setup (hardware), with a GPS receiver delivering precise UTC time and date (through its serial NMEA data output) and the sync pulse ("PPS", typically one pulse per second):

R1 and R3 form a voltage divider for the NMEA data, to reduce the amplitude to an acceptable level for the soundcard.

R2 and R3 form a different divider for the 1-PPS (sync signal with one pulse per second). Important: The divided 1-PPS voltage must be higher than the divided NMEA voltage (as seen by the soundcard), otherwise the software has difficulty separating them. Also, the input of the soundcard must not be overloaded / clipped on any channel.
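The level condition above can be checked with a short calculation. The schematic itself is not reproduced in this text, so the C sketch below rests on assumptions: R1 and R2 both feed one common node, R3 pulls that node to ground, and both GPS outputs swing between 0 V and a positive level. The soundcard's input impedance can be folded into R3 as a parallel resistance. By superposition (Millman's theorem), the node voltage is the conductance-weighted average of the source voltages.

```c
/* Voltage at the junction of R1 (from the NMEA output), R2 (from the PPS output)
   and R3 (to ground), by superposition / Millman's theorem.
   GPS output impedances are neglected; fold the soundcard input into R3. */
double junction_voltage(double v_nmea, double v_pps,
                        double r1, double r2, double r3)
{
    double g1 = 1.0 / r1, g2 = 1.0 / r2, g3 = 1.0 / r3;   /* conductances in siemens */
    return (v_nmea * g1 + v_pps * g2) / (g1 + g2 + g3);
}
```

As an example, assume 5 V logic levels and the hypothetical values R1 = 100 kOhm, R2 = 10 kOhm and R3 = 1 kOhm. NMEA alone then gives about 0.045 V and PPS alone about 0.45 V at the soundcard input, so the PPS pulses stand out clearly.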

Capacitor "C" is not really required - it's only there to emphasize that a 'DC' coupling between the GPS receiver and the input to the soundcard is not necessary.

Fortunately, all GPS receivers tested so far (Garmin GPS 18 LVC, and a Jupiter unit) showed no overlap between PPS and NMEA.

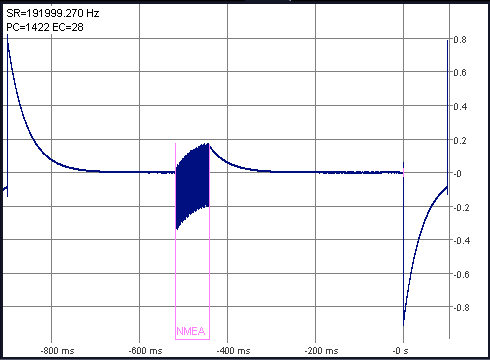

A Garmin GPS 18 LVC with relatively new firmware (as of 2011) produced this combined waveform on the 'R' audio input:

A suitable configuration to send a timestamped VLF stream with the hardware shown above is contained in the Spectrum Lab installation.

To load it, select File (in SL's menu), 'Load Settings From', and pick the file 'Timestamped_VLF_Stream_Sender.usr' from the configurations directory.

Further reading:

- Principle and hardware for GPS synchronisation (in Spectrum Lab; includes recommended levels of the combined NMEA + PPS signal, etc)

- Checking the GPS NMEA data output / position display (shows GPS position, number of satellites currently in use, GPS quality indicator (HDOP), height above sea level, and GPS speed, depending on the GPS receiver's configuration)

- Configuration of the Ogg/Vorbis output stream (internet uplink to the remote VLF stream server)

- GPS 18x Technical Specifications (by Garmin, external link)

- Search the web for "RS Components GPS 18 LVC" .. the order code at RS Components used to be 445-090, and their price was fair in 2011.

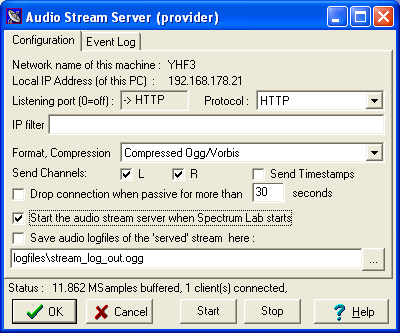

Operation as audio stream server

For ad-hoc tests, and only for a limited number of clients, SL can also operate as server (which can stream audio to remote clients). The network protocol doesn't necessarily have to be HTTP (Hypertext Transfer Protocol); audio streams can also use 'raw TCP' (without HTTP) as transport layer.

Note: The audio stream server described in this chapter has nothing in common with SL's OpenWebRX-compatible web server!

By definition, the server listens on a TCP port for incoming connections from remote clients. The server can only accept a limited number of connections, usually limited by the 'upstream' bandwidth of a typical ADSL connection.
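As a rough estimate of that limit: each connected client receives its own copy of the stream, so the upstream bandwidth is shared among all clients. The C sketch below is a back-of-the-envelope helper with a hypothetical name. The reserve fraction, left over for other traffic, is an assumption of this sketch.

```c
/* Rough upper limit for simultaneous stream clients. Each client gets its own copy
   of the stream; 'reserve_fraction' (0..1) of the upstream is kept for other traffic. */
int max_stream_clients(double upstream_kbps, double stream_kbps, double reserve_fraction)
{
    if (stream_kbps <= 0.0) return 0;
    double usable = upstream_kbps * (1.0 - reserve_fraction);
    if (usable <= 0.0) return 0;
    return (int)(usable / stream_kbps);   /* truncate: half a stream is no stream */
}
```

With a hypothetical 1000 kbit/s upstream, 75 kbit/s per Ogg/Vorbis stream and 20 % reserve, max_stream_clients(1000.0, 75.0, 0.2) returns 10.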

To stream audio over the internet, it is recommended to use the Ogg/Vorbis compression.

In a local network (LAN), better performance (especially for very weak signals "buried in the noise") can be achieved by streaming non-compressed samples.

To configure SL's built-in audio streaming server, select 'File' .. 'Audio Stream Server' from the main menu.

The audio stream server inside SL usually uses HTTP as the transport protocol. In that case, the port of Spectrum Lab's integrated HTTP server is used (default: 80), as configured on SL's HTTP server control panel.

Hint: To open the HTTP server control panel from the Audio Stream Server panel, first set 'Protocol:' to 'HTTP' as shown in the above screenshot. The 'Listening port' on the Audio Stream Server panel will then be grayed out and show '-> HTTP'. This indicates that the HTTP server's port number cannot be configured here, but only on the HTTP server's own control panel. Click into the grayed field showing '-> HTTP' to open the HTTP Server panel.

To embed the audio stream in a webpage (e.g. audiostream.html in SL's "server pages"), put an HTML5 <audio> element into it.

Note: As often, "that certain browser" sucks.. it only supports the patent-encumbered mp3 format but not Ogg Vorbis. As usual, the solution is to try another browser... Firefox, Opera, Safari and most other browsers supported Ogg at the time of this writing.

To test the audio stream server without a browser, a second instance of Spectrum Lab can be started, for simplicity on the same PC. In that case ("local" test on the same PC), enter the URL http://127.0.0.1/_audiostream.ogg in the audio stream receiver (client), as explained in the chapter about analysing web streams.

If the protocol isn't HTTP but (raw) TCP, the server's listening port must be entered in decimal form. As described here for the HTTP server, you may have to check with netstat whether the intended port number is already occupied by the operating system or by another application running on the same PC. If the audio streaming server shall be accessible "from the internet", use a procedure similar to the one described here for the HTTP server.

Note that not all Windows versions will pop up a warning if they (or the computer's firewall) have blocked a certain port number.

Audio stream server troubleshooting

If the remote audio stream receiver (client, web browser) doesn't start playing, open the Event Log tab in the Audio Stream Server (provider) panel. Like Spectrum Lab's HTTP Server Event Log, the audio server's event log shows accepted connections from remote clients, along with their IP addresses and the TCP port used for the stream. This port is not to be confused with the 'listening' port, which is usually 80 for HTTP. If all works as planned, the log would show something like this:

2020-06-30 14:27:00.8 Opened Vorbis stream ouput server

2020-06-30 14:29:58.4 Connected by 127.0.0.1:51648, initial headers ok, 11025 samples/sec

2020-06-30 14:30:58.4 Disconnected from 127.0.0.1:51648, 482.8 kByte sent

2020-06-30 08:38:12.6 Opened Vorbis stream ouput server

2020-06-30 08:41:18.3 Connected by 127.0.0.1:51549, initial headers n/a, pse wait

2020-06-30 08:42:18.3 Disconnected from 127.0.0.1:51549, 84.00 Byte sent

The HTTP server itself usually closes the connection if there's no traffic in either direction for some time (e.g. 60 seconds). This is what happened, deliberately provoked, in the 'bad' example shown above (the second group of log entries, with 'initial headers n/a'). The 84 bytes sent were only the HTTP response header. Only the HTTP Server Event Log (not the audio server event log) will show that header. Use the 'VERBOSE' option to see request and response headers there. Example (traffic as seen by the HTTP server):

21:27:28.050 HTTP Server Started: YHF6 on port 80

21:27:43.726 Connection #0 accepted on socket 1020 from 127.0.0.1

21:27:43.726 AudioStreamServer: Connected by 127.0.0.1:52519, initial headers ok, 11025 samples/sec

21:27:43.726 Connection #0 C:\cbproj\SpecLab\server_pages\_audiostream.ogg

21:27:43.726 Connection #0 rcvd hdr[388]="GET /_audiostream.ogg HTTP/1.1

Host: 127.0.0.1

User-Agent: Mozilla/5.0 (Windows NT 6.3; Win64; x64; rv:77.0) Gecko/20100101 Firefox/77.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8

Accept-Language: de,en-US;q=0.7,en;q=0.3

Accept-Encoding: gzip, deflate

DNT: 1

Connection: keep-alive

Upgrade-Insecure-Requests: 1 ( <- this is one of MANY header lines ignored by this server)

Cache-Control: max-age=0"

21:27:43.726 Connection #0 sent response header[44]="HTTP/1.1 200 OK

Content-Type: audio/ogg"

21:28:27.325 Socket 1020 closed by remote client despite KEEP_ALIVE : no error

21:28:27.325 Connection #0 closed, socket 1020, ti=43.6 s, rcvd=388, sent=392562 byte.

If there's no sign of life from a remote client in the audio server log at all, and the protocol is HTTP, also open Spectrum Lab's HTTP Server Event Log. Check it for problems with the HTTP listening port, especially the notorious problem of Windows or other applications 'occupying' that port (80 by default). For details, see 'HTTP server troubleshooting'.

Internals

Supported formats

Compressed formats

At the moment, only Ogg / Vorbis audio is supported. Optionally, the Ogg container may contain (sic!) an extra logical stream for the GPS-based timestamps. These streams are compatible with Paul Nicholson's VLF receiver toolkit, which is available as C source code along with detailed documentation at abelian.org/vlfrx-tools/.

MP3 streams, as well as MP3 files, are not supported, to avoid hassle with Fraunhofer's software patents.

The "Content Type" (in the HTTP response from the remote server) must be application/ogg or audio/ogg.

Only for raw TCP connections will the program recognize Ogg streams by their first four bytes ("OggS").
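Both checks above are simple enough to sketch in C. The hypothetical helpers below compare the first four bytes with the Ogg capture pattern "OggS", and compare an HTTP Content-Type value with the two accepted types. Two choices are assumptions of this sketch, not documented Spectrum Lab behaviour: parameters after ';' are ignored, and the comparison is case-insensitive (media types are case-insensitive in HTTP).

```c
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* 1 if the first bytes of a raw TCP stream carry the Ogg capture pattern "OggS". */
int looks_like_ogg(const unsigned char *buf, size_t len)
{
    return len >= 4 && memcmp(buf, "OggS", 4) == 0;
}

/* 1 if an HTTP Content-Type value is application/ogg or audio/ogg. */
int is_ogg_content_type(const char *ct)
{
    char type[32];
    size_t n = 0;
    while (*ct == ' ' || *ct == '\t') ct++;                  /* skip leading blanks */
    while (ct[n] != '\0' && ct[n] != ';' && ct[n] != ' ' && ct[n] != '\t'
           && n < sizeof type - 1) {
        type[n] = (char)tolower((unsigned char)ct[n]);       /* normalise case */
        n++;
    }
    type[n] = '\0';
    return strcmp(type, "application/ogg") == 0 || strcmp(type, "audio/ogg") == 0;
}
```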

ADPCM-compressed audio is only supported in Spectrum Lab's OpenWebRX-compatible web server, using the WebSocket protocol, not 'simple' HTTP.

Non-compressed formats

Supported data types (at the time of this writing, 2014-04-27):

- 8 bit signed integer (range +/-127, the pattern 0x80 is reserved to identify headers)
- 16 bit signed integer (range +/-32767, 0x8000 is reserved to identify headers)
- 32 bit signed integer (range +/-2147483647, 0x80000000 is reserved to identify headers)
- 32 bit floating point (range +/- 1.0)
- 64 bit floating point (range +/- 1.0)

As usual on Intel- and most ARM-CPUs, use little endian format (aka 'least significant byte first').
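Because the pattern 0x8000 is reserved, a sender must never emit -32768 as a 16-bit sample. The following C sketch (hypothetical function name) shows one way to achieve this. Float samples in the nominal range +/-1.0 are scaled, clipped to +/-32767, rounded, and written least significant byte first.

```c
#include <stddef.h>
#include <stdint.h>

/* Converts float samples (nominal range +/-1.0) into little-endian 16-bit signed
   integers, clipped to +/-32767 so that the reserved value 0x8000 never occurs. */
void float_to_int16_le(const float *in, size_t n, unsigned char *out)
{
    for (size_t i = 0; i < n; i++) {
        float x = in[i] * 32767.0f;
        if (x != x) x = 0.0f;                    /* NaN: output silence */
        if (x >  32767.0f) x =  32767.0f;        /* clip instead of wrapping around */
        if (x < -32767.0f) x = -32767.0f;        /* -32768 (0x8000) is reserved */
        int32_t v = (int32_t)(x >= 0.0f ? x + 0.5f : x - 0.5f);   /* round to nearest */
        uint16_t u = (uint16_t)(int16_t)v;       /* two's complement bit pattern */
        out[2 * i]     = (unsigned char)(u & 0xFFu);   /* least significant byte first */
        out[2 * i + 1] = (unsigned char)(u >> 8);
    }
}
```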

To allow automatic detection of the sample format, it is recommended to insert 'stream headers' at the sending end.

Any header begins with the unique pattern 0x80008000 (in hexadecimal notation as a 'doubleword').

Some of the non-compressed formats can also be used when streaming audio over a serial port (aka "COM port" or "Virtual COM Port" under Windows) into Spectrum Lab. The intention is to keep the microcontroller firmware (which may drive an external A/D converter) as simple as possible.

The header structures for non-compressed streams are specified below (as C sourcecode) :

#include <stdint.h> // int32_t, uint32_t

typedef struct VT_BLOCK // compatible with VT_BLOCK defined in vlfrx-tools-0.7c/vtlib.h
{ // DON'T MODIFY - keep compatible with Paul's VLF Tools !
  int32_t  magic;       // should contain VT_MAGIC_BLK = 27859 = Data block header
# define VT_MAGIC_BLK 27859
  uint32_t flags;       // See VTFLAG_* below
  uint32_t bsize;       // Number of frames per block
  uint32_t chans;       // Number of channels per frame
  uint32_t sample_rate; // Nominal sample rate
  uint32_t secs;        // Timestamp, seconds part
  uint32_t nsec;        // Timestamp, nanoseconds part
  int32_t  valid;       // Set to one if block is valid
  int32_t  frames;      // Number of frames actually contained
  int32_t  spare;
  double   srcal;       // Actual rate is sample_rate * srcal (8 byte IEEE double precision float)
} T_VT_Block;

// Bit definitions for VT_BLOCK and VT_BUFFER flags field. Copied from vlfrx-tools/vtlib.h :
#define VTFLAG_RELT    (1<<0) // Timestamps are relative, not absolute
#define VTFLAG_FLOAT4  (1<<1) // 4 byte floats (8 byte default)
#define VTFLAG_FLOAT8  0
#define VTFLAG_INT1    (2<<1) // 1 byte signed integers
#define VTFLAG_INT2    (3<<1) // 2 byte signed integers
#define VTFLAG_INT4    (4<<1) // 4 byte signed integers
#define VTFLAG_FMTMASK (VTFLAG_FLOAT4 | VTFLAG_INT1 | VTFLAG_INT2 | VTFLAG_INT4) // Mask for format bits

typedef struct tStreamHeader
{
  uint32_t dwPattern8000; // pattern 0x80008000 (0x00 0x80 0x00 0x80, little endian order),
                          // never appears in the 16-bit signed integer samples because
                          // THEIR range is limited to -32767 ... +32767 .
                          // Also very unlikely to appear in a stream of 32-bit floats.
# define STREAM_HDR_PATTERN 0x80008000
  uint32_t nBytes;        // total size of any header, required for stream reader
                          // to skip unknown headers up to the next raw sample .
                          // Must always be a multiple of FOUR, to keep things aligned.
                          // Example: nBytes = 4*4 + sizeof(VT_BLOCK) = 16 + 10*4+8 = 64 bytes .
                          // (assuming that 'int' in struct VT_BLOCK is, and will always be, 32 bit)
  uint32_t dwReserve;     // 'reserved for future use' (and for 8-byte alignment)
  uint32_t dwStructID;    // 0=dummy,
                          // 1=the rest is a VT_BLOCK as defined in vlfrx-tools-0.7c/vtlib.h
                          // (etc... more, especially headers with GPS lat/lon/masl will follow)
# define STREAM_STRUCT_ID_NONE     0
# define STREAM_STRUCT_ID_VT_BLOCK 1
} T_StreamHeader;

typedef struct tStreamHdrUnion
{ T_StreamHeader header;
  union
  { T_VT_Block vtblock; // used when header.dwStructID == STREAM_STRUCT_ID_VT_BLOCK
  } u;
} T_StreamHdrUnion;
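For illustration, a reader could locate such a header in a received byte stream as sketched below in C. The sketch does not depend on the compiler's struct layout. Instead, it reads the little-endian fields at the byte offsets implied by the structures above: 16 header bytes, followed by the VT_BLOCK with srcal at offset 40. Several details are assumptions of this sketch, not part of the specification: scanning only 4-byte aligned positions, the HeaderInfo struct, and the function names. Decoding srcal this way also assumes an IEEE-754 host.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Writes / reads a 32-bit value in little-endian byte order (no alignment needed). */
void put_u32le(unsigned char *p, uint32_t v)
{
    p[0] = (unsigned char)v;         p[1] = (unsigned char)(v >> 8);
    p[2] = (unsigned char)(v >> 16); p[3] = (unsigned char)(v >> 24);
}

uint32_t get_u32le(const unsigned char *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Selected header fields; the VT_BLOCK fields are only set if vt_valid != 0. */
typedef struct {
    uint32_t nBytes, structID;
    int      vt_valid;
    uint32_t flags, chans, sample_rate, secs, nsec;
    double   srcal;
} HeaderInfo;

/* Looks for the pattern 0x80008000 at 4-byte aligned offsets of buf.
   Returns the header's offset, or -1 if no complete, plausible header was found. */
long find_stream_header(const unsigned char *buf, size_t len, HeaderInfo *info)
{
    for (size_t off = 0; off + 16 <= len; off += 4) {
        if (get_u32le(buf + off) != 0x80008000u) continue;
        uint32_t nbytes = get_u32le(buf + off + 4);
        if (nbytes < 16 || nbytes % 4 != 0 || nbytes > len - off)
            return -1;                               /* corrupt, or not yet fully received */
        memset(info, 0, sizeof *info);
        info->nBytes   = nbytes;
        info->structID = get_u32le(buf + off + 12);  /* dwStructID */
        const unsigned char *vt = buf + off + 16;    /* payload after T_StreamHeader */
        if (info->structID == 1 && nbytes >= 64 && get_u32le(vt) == 27859u) {
            info->vt_valid    = 1;
            info->flags       = get_u32le(vt + 4);
            info->chans       = get_u32le(vt + 12);
            info->sample_rate = get_u32le(vt + 16);
            info->secs        = get_u32le(vt + 20);
            info->nsec        = get_u32le(vt + 24);
            uint64_t bits = (uint64_t)get_u32le(vt + 40) | ((uint64_t)get_u32le(vt + 44) << 32);
            memcpy(&info->srcal, &bits, sizeof info->srcal);  /* IEEE-754 double assumed */
        }
        return (long)off;
    }
    return -1;
}
```

After a successful call, a reader would skip nBytes bytes from the returned offset to reach the next raw sample. This matches the purpose of nBytes stated in the header definition.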

Timestamped Audio Streams

Both compressed and non-compressed audio streams (see previous subchapters) may optionally contain precise timestamps, sent periodically along with the T_StreamHeader and/or VT_BLOCKs (compatible with the VLF-receiver-toolkit). Precisely timestamped audio streams allow experiments with radio direction finding when combined on a suitable server, such as the VLF TDOA experiments carried out by Paul Nicholson; see the description of his VLF-RX-toolbox.

To send a timestamped VLF audio stream from your receiver, you will need a suitable GPS receiver (equipped with a sync pulse output) as described here.

When receiving a timestamped stream, the timestamps can be used to 'lock' phases of oscillators to those timestamps (instead of the sync pulses from a GPS receiver) as explained here.

Supported network protocols

At the moment, the reader for compressed audio streams only supports HTTP and 'raw' TCP/IP connections. The protocol is recognized by the beginning of the URL (or pseudo-URL):

- http://

- Hypertext Transfer Protocol. This is the common protocol used on the internet, also for audio streams.

Spectrum Lab's HTTP server can also stream audio via HTTP, or alternatively via WebSocket for OpenWebRX.

- tcp://

- Uses a TCP/IP connection, not HTTP, and usually some kind of 'binary header' or 'block' structure, at least at the beginning of a stream. This protocol is also used to feed audio into VLF 'Natural Radio' stream servers. The token "tcp" is not an officially recognized URL scheme; it is only used inside Spectrum Lab to leave no doubt about the protocol.

- rawtcp://

- Also uses a TCP/IP connection, not HTTP, but typically without 'headers' or 'block structure': the stream contains raw, non-compressed samples (e.g. 16-bit integer samples) and nothing else.

When receiving a stream, there is no real difference between 'tcp://' and 'rawtcp://', because in both cases Spectrum Lab will examine the first received bytes for 'headers' or 'blocks'. If the 'rawtcp' stream does contain headers or VT_BLOCKs (see previous chapter), SL will automatically route the received stream to the appropriate decoder.

Like "tcp", the token "rawtcp" is not an officially recognized URL scheme, but it leaves no doubt that the upper network protocol layer is NOT HTTP.
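Since the protocol is chosen only from the beginning of the (pseudo-)URL, the dispatch can be a plain prefix comparison. The following sketch is hypothetical: the enum and function names are made up, and the case-sensitive comparison is an assumption. It covers the three schemes accepted by the stream reader listed above; anything else is reported as unknown:

```c
#include <string.h>

/* Hypothetical protocol identifiers, for illustration only. */
typedef enum {
  STREAM_PROTO_UNKNOWN = 0,
  STREAM_PROTO_HTTP,     /* "http://"   */
  STREAM_PROTO_TCP,      /* "tcp://"    */
  STREAM_PROTO_RAWTCP    /* "rawtcp://" */
} StreamProto;

/* Returns nonzero if string s begins with the given prefix. */
static int has_prefix(const char *s, const char *prefix)
{
  return strncmp(s, prefix, strlen(prefix)) == 0;
}

/* Selects the protocol handler from the beginning of the (pseudo-)URL. */
static StreamProto classify_stream_url(const char *url)
{
  if (has_prefix(url, "http://"))   return STREAM_PROTO_HTTP;
  if (has_prefix(url, "rawtcp://")) return STREAM_PROTO_RAWTCP;
  if (has_prefix(url, "tcp://"))    return STREAM_PROTO_TCP;
  return STREAM_PROTO_UNKNOWN;      /* e.g. udp:// is not a web stream */
}
```

The order of the "rawtcp://" and "tcp://" checks does not matter, because neither token is a prefix of the other.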

- Note:

- Besides the protocols listed above, the parser for "pseudo-URLs" in Spectrum Lab also recognizes a few other protocols, but they cannot be used for audio streaming (yet?). For example, pseudo-URLs are also used as the 'address' for certain remotely controllable radios with a LAN or WLAN interface, for audio streaming via UDP (in the local network or between multiple instances running on the same machine), etc. Even though the following are not used for 'web streams' (..yet?), they are listed here for completeness:

- udp://<device name>:<port number>

- Send to, or receive from, the specified 'device' (by its hostname), using UDP (User Datagram Protocol). Typically used for remote control of modern radios like Icom's IC-9700 or IC-705. In this case, the 'default' device name is the model name of the radio, e.g. IC-705 (note the hyphen; it is a valid character in a hostname). The colon separates the hostname (or numeric IP address) from the port number.

Note: To distinguish Icom's incredibly complex audio-via-UDP protocol from the beautifully simple "Audio-via-UDP" used in Spectrum Lab, the latter requires the 'raw' sample format to be appended in the 'params:' field, as explained here (originally written for "Audio-via-COM-port", but the very same formats, like 8/16/24/32-bit integer, signed/unsigned, little/big endian, can also be sent to, or received from, UDP ports instead of 'COM' ports).

- udp://localhost:<port number>

- Typically used to exchange audio via UDP between applications running on the same 'machine' (PC), aka "local host". Here, with only IPv4 supported, "localhost" is the same as the numeric loopback IP address "127.0.0.1". Packets ('datagrams') directed to this pseudo-URL don't really 'travel on a wire' (or wireless LAN) but are handled internally by the PC's network driver software.
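Splitting a pseudo-URL like "udp://IC-705:<port number>" into hostname and port number, as described above, could look like the sketch below. The function parse_udp_pseudo_url() is hypothetical and not taken from Spectrum Lab; it splits at the last colon, accepts ports 1 to 65535, and does not resolve "localhost" or any other hostname:

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical parser for "udp://<host>:<port>".
 * Copies the hostname into 'host' (capacity host_size) and stores the port.
 * Returns 0 on success, -1 if the string does not have that form. */
static int parse_udp_pseudo_url(const char *url, char *host, size_t host_size,
                                unsigned *port)
{
  static const char prefix[] = "udp://";
  const size_t prefix_len = sizeof prefix - 1;
  const char *p, *colon;
  char *end;
  unsigned long value;
  size_t host_len;

  if (strncmp(url, prefix, prefix_len) != 0)
    return -1;
  p = url + prefix_len;
  colon = strrchr(p, ':');               /* colon separates host and port */
  if (colon == NULL || colon == p)       /* no colon, or empty hostname   */
    return -1;
  host_len = (size_t)(colon - p);
  if (host_len >= host_size)             /* hostname does not fit         */
    return -1;
  memcpy(host, p, host_len);             /* hyphens (IC-705) are kept as-is */
  host[host_len] = '\0';
  value = strtoul(colon + 1, &end, 10);
  if (end == colon + 1 || *end != '\0' || value == 0 || value > 65535ul)
    return -1;                           /* missing, trailing junk, or out of range */
  *port = (unsigned)value;
  return 0;
}
```

Resolving the hostname (e.g. "localhost" to 127.0.0.1) would be a separate step, for example using the operating system's name resolution in the socket API.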

Stream Test Application

During program development, a simple test utility was written as a replacement for a remote audio stream server (audio sink for Ogg/Vorbis encoded webstreams). The source code and executable (a Windows console application) are included in the 'Goodies' subdirectory after installing Spectrum Lab. The "C" source code contains a short description.

When launched without parameters, the executable (TCP_Dummy_Sink_for_AudioStreams.exe) opens TCP port 12345 and listens for connections. Only one connection can be handled at a time. After being connected (for example, from Spectrum Lab), the utility writes all received data into a file, beginning with "test000.ogg". This name was chosen because in most cases, Spectrum Lab will send Ogg/Vorbis encoded streams.

For each newly accepted connection (after the previous one has been disconnected), the file sequence number is incremented and a new file is written (test001.ogg, test002.ogg, etc.).

If the connection works as it should (and nothing gets lost "on the way"), the contents of the received file (written by the "TCP Dummy Sink" utility) will be exactly the same as the stream audio logfile (optionally written by Spectrum Lab while sending the stream).
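To check a test run as described above, the sink's output file can be compared byte by byte with Spectrum Lab's stream audio logfile. A minimal sketch; both helper names are hypothetical, and the "test%03u.ogg" pattern merely reproduces the file names listed above (it is not taken from the utility's source code):

```c
#include <stddef.h>
#include <stdio.h>

/* Builds the sink's file name for a given connection number:
 * 0 -> "test000.ogg", 1 -> "test001.ogg", ...
 * Returns the snprintf() result (length of the complete name). */
static int make_sink_filename(char *buf, size_t size, unsigned seq)
{
  return snprintf(buf, size, "test%03u.ogg", seq);
}

/* Compares two files byte by byte.
 * Returns 1 if identical, 0 if they differ, -1 if either cannot be opened.
 * Read errors are treated like end-of-file in this sketch. */
static int files_identical(const char *path_a, const char *path_b)
{
  FILE *fa = fopen(path_a, "rb");
  FILE *fb = fopen(path_b, "rb");
  int result = -1;

  if (fa != NULL && fb != NULL) {
    int ca, cb;
    do {
      ca = fgetc(fa);
      cb = fgetc(fb);
    } while (ca == cb && ca != EOF);
    result = (ca == cb);   /* 1 only if both files ended at the same time */
  }
  if (fa != NULL) fclose(fa);
  if (fb != NULL) fclose(fb);
  return result;
}
```

A result of 0 would mean that data was lost, duplicated or altered somewhere between sender and sink.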

Interpreter commands to control web audio streams

The prefix "stream." is used for a group of commands to control audio streams:-

stream.start_input

This command has the same effect as clicking 'Start' in the 'Analyse / play stream' control panel.

Note: It may take a few milliseconds until the stream actually starts, because the interpreter command only sets a flag that is polled in another thread. The same applies to similar commands listed further below.


stream.stop_input

This command has the same effect as clicking 'Stop' in the 'Analyse / play stream' control panel.
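The delay mentioned in the note above follows from a request-flag pattern: the interpreter only sets a flag, and the stream thread acts on it the next time it polls. Below is a minimal C11 sketch of that general pattern. All names are hypothetical, it does not reproduce Spectrum Lab's internals, and no real thread is created here, so stream_thread_poll() is simply called directly:

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical request flags: written by the interpreter thread,
 * read and cleared by the stream thread. */
static atomic_bool stream_start_requested = false;
static atomic_bool stream_stop_requested  = false;
static bool stream_running = false;   /* owned by the stream thread only */

/* Interpreter side ("stream.start_input"): returns immediately. */
static void request_stream_start(void) { atomic_store(&stream_start_requested, true); }

/* Interpreter side ("stream.stop_input"): returns immediately. */
static void request_stream_stop(void)  { atomic_store(&stream_stop_requested, true); }

/* Stream thread side, called periodically (e.g. once per received block).
 * The stream state only changes here, so the command takes effect
 * up to one polling interval later. */
static void stream_thread_poll(void)
{
  if (atomic_exchange(&stream_stop_requested, false))
    stream_running = false;   /* a real implementation would disconnect here */
  if (atomic_exchange(&stream_start_requested, false))
    stream_running = true;    /* a real implementation would connect here */
}
```

atomic_exchange() reads and clears a flag in one step, so a request set by the interpreter between the read and the clear cannot get lost. Handling a pending stop before a pending start is an arbitrary choice in this sketch.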

Last modified : 2023-07-05

Do you need a German translation? Perhaps this translator will help, even if the result is sometimes rather "droll"!

Do you need a French translation? Perhaps this translator will help!